CBS News

Woman shot dead while walking on trail in Nashville; suspect recognized as twin brother from different case

A woman was shot to death while walking on a trail in Nashville, and police said they arrested her suspected killer Tuesday and charged the man with her murder. To identify him, authorities followed an unusual trail of clues that ended with one detective recognizing the suspect as the identical twin of someone involved in a case three years ago.

Alyssa Lokits, 34, was found dead from a gunshot wound Monday in an overgrown area of the Mill Creek Greenway, a nature trail in the Antioch neighborhood, the Nashville Police Department said. Their investigation revealed Lokits was exercising on the trail when a man emerged from between two parked cars and started to follow her.

Witnesses reported hearing her scream, “Help! He’s trying to rape me,” before hearing gunfire, according to police. The suspect was apparently seen near where Lokits’ body was found, and, shortly after, returning to his own car with scratches on his arms and blood on his clothing.

The suspect was seen following Lokits in video footage that also showed him returning to his car with cuts and bloodied clothing. Nashville Police said the footage came from the dash camera of a local resident whose car was parked in that area. The footage gave police clear images of the suspect and his vehicle, a BMW sedan, while a witness partially recalled his license plate number, they said.

A homicide detective reviewed the images Tuesday morning and identified the suspect, after recognizing him as the identical twin brother from a suicide case that she had worked in December 2021, police said.

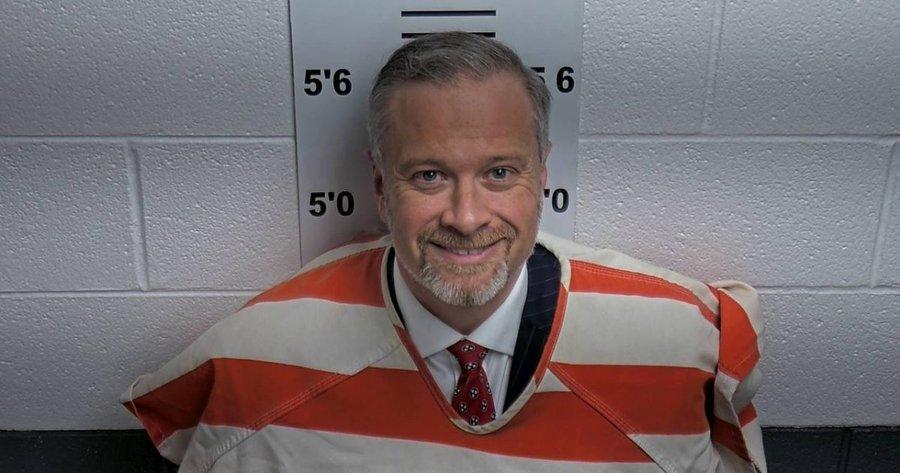

Detectives with the police department’s homicide and special investigations division issued an arrest warrant charging the suspect, 29-year-old Paul Park, with criminal homicide.

Nashville Police

Officers had set up surveillance at Park’s home and took him into custody as soon as the warrant came down. They stopped him while he was out driving, just miles from the greenway where Lokits was killed.

Park was booked on the homicide charge and is currently being held in the Davidson County jail, records show. He is scheduled to make his first court appearance Thursday.

Lokits lived near the greenway and worked in IT and cybersecurity, CBS News affiliate WTVF reported. She was shot once in the head and rushed to Vanderbilt University Medical Center, where she died, according to the station.


CBS News

Teen victim of AI-generated “deepfake pornography” urges Congress to pass “Take It Down Act”

Anna McAdams has always kept a close eye on her 15-year-old daughter Elliston Berry’s life online. So it was hard to come to terms with what happened 15 months ago on the Monday morning after Homecoming in Aledo, Texas.

A classmate took a picture from Elliston’s Instagram, ran it through an artificial intelligence program that appeared to remove her dress and then sent around the digitally altered image on Snapchat.

“She came into our bedroom crying, just going, ‘Mom, you won’t believe what just happened,’” McAdams said.

Last year, there were more than 21,000 deepfake pornographic videos online — up more than 460% over the year prior. The manipulated content is proliferating on the internet as websites make disturbing pitches — like one service that asks, “Have someone to undress?”

“I had PSAT testing and I had volleyball games,” Elliston said. “And the last thing I need to focus and worry about is fake nudes of mine going around the school. Those images were up and floating around Snapchat for nine months.”

In San Francisco, Chief Deputy City Attorney Yvonne Mere was starting to hear stories similar to Elliston’s — which hit home.

“It could have easily been my daughter,” Mere said.

The San Francisco City Attorney’s office is now suing the owners of 16 websites that create “deepfake nudes,” where artificial intelligence is used to turn non-explicit photos of adults and children into pornography.

“This case is not about tech. It’s not about AI. It’s sexual abuse,” Mere said.

These 16 sites had 200 million visits in just the first six months of the year, according to the lawsuit.

City Attorney David Chiu says the 16 sites in the lawsuit are just the start.

“We’re aware of at least 90 of these websites. So this is a large universe and it needs to be stopped,” Chiu said.

Republican Texas Sen. Ted Cruz is co-sponsoring another angle of attack with Democratic Minnesota Sen. Amy Klobuchar. The Take It Down Act would require social media companies and websites to remove non-consensual, pornographic images created with AI.

“It puts a legal obligation on any tech platform — you must take it down and take it down immediately,” Cruz said.

The bill passed the Senate this month and is now attached to a larger government funding bill awaiting a House vote.

In a statement, a spokesperson for Snap told CBS News: “We care deeply about the safety and well-being of our community. Sharing nude images, including of minors, whether real or AI-generated, is a clear violation of our Community Guidelines. We have efficient mechanisms for reporting this kind of content, which is why we’re so disheartened to hear stories from families who felt that their concerns went unattended. We have a zero tolerance policy for such content and, as indicated in our latest transparency report, we act quickly to address it once reported.”

Elliston says she’s now focused on the present and is urging Congress to pass the bill.

“I can’t go back and redo what he did, but instead, I can prevent this from happening to other people,” Elliston said.
