CBS News

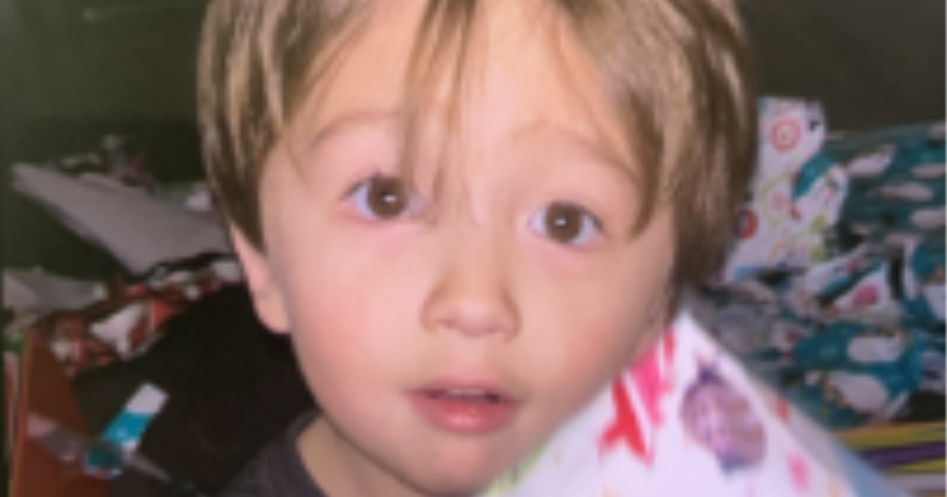

Missing toddler Elijah Vue’s blanket found weeks after disappearance

The blanket of a missing Wisconsin toddler was found weeks after his disappearance, local police said on Monday.

The Two Rivers Police Department said that a red and white plaid blanket found earlier in this investigation was confirmed to belong to Elijah Vue, a Wisconsin 3-year-old who went missing on February 20. The blanket was found about 3.7 miles from where Elijah was last seen, the department said in a news release.

An initial description of Elijah said he was last seen wearing gray pants, a long-sleeved dark shirt, and red and green dinosaur shoes, according to CBS affiliate WDJT. He might have been carrying the blanket, WDJT reported.

Elijah’s mother, Katrina Baur, and a man, Jesse Vang, were arrested and charged with child neglect on Feb. 21. WDJT reported that local authorities said that Baur handed Elijah over to Vang for “discipline.” The Vue family told the station that they don’t know how Baur knew Vang, who served six years in prison for the distribution of methamphetamine. WDJT later described Vang as Baur’s boyfriend.

It was Vang’s apartment that Elijah disappeared from, police said, and Vang who reported him missing.

“(Baur) intentionally sent that child for disciplinary reasons for more than a week to (Vang’s) residence,” Manitowoc County District Attorney Jacalyn LaBre said in a court hearing on Feb. 23. “She was aware of the tactics used and the lack of care provided. This was an intentional thing by her.”

Both Baur and Vang maintain that they had nothing to do with Elijah’s disappearance, WDJT reported. They will next appear in court this week. Baur will be arraigned on Friday, while Vang’s preliminary hearing will be held on Thursday, police said. Both remain held in Manitowoc County Jail, according to online records.

Police have taken possession of a vehicle that they said was identified during the investigation. The car, a four-door 1997 Nissan Altima, has Wisconsin plates beginning with “A” and ending with “0.” The car was not owned by Baur or Vang, police said.

“Our interest is not with the current owner of the vehicle, only in the camera footage captured on February 19, 2024, between the hours of 2:00 PM – 9:00 PM,” the department said.

Anyone with information leading to the discovery of the toddler may be eligible for a reward of up to $40,000, police said.

Multiple agencies have been searching for Elijah since he was reported missing. The Two Rivers Police Department has asked all members of the public to keep an eye out for the toddler and check all urban and rural areas, including water, to help find him or any evidence related to his disappearance. Officials have searched storm sewers, landfills, rivers and more, police said.

Elijah’s uncle, Orson Vue, told WDJT that the family has been drained by the search effort but has been touched to see strangers organize searches for the toddler.

“It means everything, to be honest,” Vue said. “If it was just me and my family doing this search, I don’t know what we’d even do. With the whole world, really, reaching out, giving their support, their love, it means the world, and we couldn’t do it without them.”

CBS News

Teen victim of AI-generated “deepfake pornography” urges Congress to pass “Take It Down Act”

Anna McAdams has always kept a close eye on her 15-year-old daughter Elliston Berry’s life online. So it was hard to come to terms with what happened 15 months ago on the Monday morning after Homecoming in Aledo, Texas.

A classmate took a picture from Elliston’s Instagram, ran it through an artificial intelligence program that appeared to remove her dress and then sent around the digitally altered image on Snapchat.

“She came into our bedroom crying, just going, ‘Mom, you won’t believe what just happened,’” McAdams said.

Last year, there were more than 21,000 deepfake pornographic videos online — up more than 460% over the year prior. The manipulated content is proliferating on the internet as websites make disturbing pitches — like one service that asks, “Have someone to undress?”

“I had PSAT testing and I had volleyball games,” Elliston said. “And the last thing I need to focus and worry about is fake nudes of mine going around the school. Those images were up and floating around Snapchat for nine months.”

In San Francisco, Chief Deputy City Attorney Yvonne Mere was starting to hear stories similar to Elliston’s — which hit home.

“It could have easily been my daughter,” Mere said.

The San Francisco City Attorney’s office is now suing the owners of 16 websites that create “deepfake nudes,” where artificial intelligence is used to turn non-explicit photos of adults and children into pornography.

“This case is not about tech. It’s not about AI. It’s sexual abuse,” Mere said.

These 16 sites had 200 million visits in just the first six months of the year, according to the lawsuit.

City Attorney David Chiu says the 16 sites in the lawsuit are just the start.

“We’re aware of at least 90 of these websites. So this is a large universe and it needs to be stopped,” Chiu said.

Republican Texas Sen. Ted Cruz is co-sponsoring another angle of attack with Democratic Minnesota Sen. Amy Klobuchar. The Take It Down Act would require social media companies and websites to remove non-consensual, pornographic images created with AI.

“It puts a legal obligation on any tech platform — you must take it down and take it down immediately,” Cruz said.

The bill passed the Senate this month and is now attached to a larger government funding bill awaiting a House vote.

In a statement, a spokesperson for Snap told CBS News: “We care deeply about the safety and well-being of our community. Sharing nude images, including of minors, whether real or AI-generated, is a clear violation of our Community Guidelines. We have efficient mechanisms for reporting this kind of content, which is why we’re so disheartened to hear stories from families who felt that their concerns went unattended. We have a zero tolerance policy for such content and, as indicated in our latest transparency report, we act quickly to address it once reported.”

Elliston says she’s now focused on the present and is urging Congress to pass the bill.

“I can’t go back and redo what he did, but instead, I can prevent this from happening to other people,” Elliston said.
